Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG): Supercharging Generative AI with Real-Time, Contextual Intelligence

Generative AI powered by large language models (LLMs) has transformed the way organizations interact with data. From drafting emails to answering complex questions, LLMs have demonstrated remarkable fluency and versatility. However, these models have an inherent limitation—they only know what they were trained on. This training data, no matter how vast, can quickly become outdated or lack relevance to a specific business context.

Enter Retrieval-Augmented Generation (RAG)—a groundbreaking technique that bridges the gap between the static knowledge of LLMs and the dynamic, evolving world of enterprise data.

Why Traditional LLMs Fall Short

- Outdated Information: LLMs don’t have access to the most recent events or developments.

- Lack of Specificity: They cannot answer company-specific questions about products, services, or internal operations.

- Inflexibility: Retraining an LLM to include new data is expensive and resource-intensive.

What Is Retrieval-Augmented Generation (RAG)?

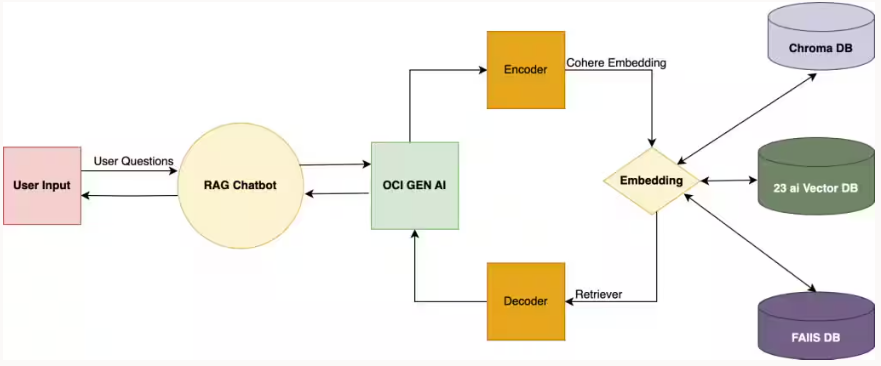

RAG is an AI architecture that enhances the performance of LLMs by integrating real-time, domain-specific information. Instead of retraining the base model, RAG allows the LLM to retrieve relevant documents or data from a constantly updated knowledge base and generate context-rich responses based on that data.

First introduced in a 2020 paper by Facebook AI Research, RAG has since gained momentum across academia and industry for its ability to combine the best of generative and retrieval-based approaches.

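Without this retrieval step, the limitations of a standalone LLM (outdated information, lack of specificity, costly retraining) can result in incorrect or vague responses, especially in customer-facing applications, which undermines trust in the AI system.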

How RAG Works

- Data Collection: All relevant data—structured (like databases) and unstructured (like PDFs, news feeds, customer chats)—is collected and normalized.

- Vectorization: This data is transformed into vector embeddings using language models. These embeddings represent the semantic meaning of the data.

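- Vector Database: The embeddings are stored in a vector database. At query time, the user's query is converted into a vector with the same embedding model so that it can be compared against the stored embeddings.

- Retrieval: The system retrieves the pieces of information whose embeddings are most similar to the query vector (for example, by cosine similarity), in real time.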

- Generation: These retrieved documents, along with the original prompt, are fed into the LLM to generate a final response.

This enables the AI to produce timely, accurate, and context-aware answers, even when the base model itself is not updated.
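
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `embed` function is a toy word-count stand-in for a real embedding model, `VectorStore` is a hypothetical in-memory class rather than an actual vector database, and the sample documents are invented. The final LLM call is left out; the sketch stops at the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []  # list of (text, vector) pairs

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, k=2):
        # Embed the query the same way as the documents, then rank by similarity.
        query_vec = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(query, passages):
    """Combine retrieved passages with the original question for the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Invented sample data for illustration only.
store = VectorStore()
store.add("The Ridge Trail is closed on Sundays for maintenance.")
store.add("Our premium plan includes 24/7 phone support.")
store.add("Refunds are processed within five business days.")

query = "Is the Ridge Trail open on Sunday?"
passages = store.search(query, k=1)
prompt = build_prompt(query, passages)
print(prompt)
```

Adding a new document to the store makes it retrievable immediately, with no change to the model that eventually reads the prompt.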

Real-World Example: RAG in Sports and Media

RAG vs. Semantic Search

While both RAG and semantic search improve AI accuracy, they serve different purposes:

- Semantic Search helps find the most relevant documents by understanding the meaning behind a query.

- RAG takes this a step further by generating human-like responses using those documents.

Semantic search is often a core component within a RAG pipeline: it ensures the retrieved context is semantically aligned with the user's intent, which the LLM can then use to generate a meaningful reply.

The Role of RAG in Chatbots

Chatbots are a natural fit for RAG technology. Users expect fast, relevant, and contextually correct answers. But most chatbots are limited to pre-defined “intents” and can’t handle nuanced questions about new or rare topics.

With RAG, chatbots gain the ability to:

- Answer questions about new products or services not included in the original training data.

- Provide localized or time-sensitive information (e.g., “Is the hiking trail open this Sunday?”).

- Support employees with personalized internal data (e.g., benefits, policies, workflows).
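
One way to picture this is a chatbot that tries its pre-defined intents first and falls back to retrieval when none match. The sketch below assumes this fallback design for illustration; `make_chatbot`, `retrieve`, `generate`, and the sample data are all hypothetical, and `generate` is a stub standing in for an LLM call.

```python
def make_chatbot(intents, retrieve, generate):
    """Build a reply function: canned intents first, retrieval-backed answers second."""

    def reply(message):
        text = message.lower()
        # 1. Pre-defined intents: simple keyword match with a canned answer.
        for keyword, canned_answer in intents.items():
            if keyword in text:
                return canned_answer
        # 2. Fallback: retrieve supporting passages and generate an answer from them.
        passages = retrieve(message)
        if not passages:
            return "Sorry, I don't have information on that."
        return generate(message, passages)

    return reply


# Invented knowledge base; a real system would query a vector database here.
KNOWLEDGE = {
    "trail": "Hiking trail status: open this Sunday, 7am to 6pm.",
    "tuition": "Tuition reimbursement covers up to two courses per year.",
}


def retrieve(message):
    """Toy retrieval: return facts whose key appears in the message."""
    return [fact for key, fact in KNOWLEDGE.items() if key in message.lower()]


def generate(message, passages):
    """Stub for an LLM call: simply echoes the retrieved passages."""
    return "Based on our records: " + " ".join(passages)


bot = make_chatbot({"opening hours": "We are open 9am to 5pm."}, retrieve, generate)
print(bot("What are your opening hours?"))
print(bot("Is the hiking trail open this Sunday?"))
```

The second question matches no intent, so the answer comes from whatever the knowledge base currently holds, which is what lets the bot handle new or time-sensitive topics.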

Benefits of Retrieval-Augmented Generation

RAG introduces a host of advantages over traditional generative AI:

- Fresh and Dynamic Knowledge: Updates can be added continuously without retraining the LLM.

- Contextual Relevance: Data repositories can be tailored to specific domains, enhancing accuracy.

- Source Transparency: RAG can cite specific data sources used in generating answers, aiding traceability and accountability.

- Error Correction: Faulty data can be identified and replaced, ensuring continuous improvement.
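
Three of these benefits (continuous updates, source transparency, and error correction) can be illustrated with a small sketch. It assumes each document is stored under a source identifier so it can be replaced or removed later; the `KnowledgeBase` class, its method names, and the sample documents are hypothetical, and word overlap stands in for real embedding similarity.

```python
import re


def tokens(text):
    """Lowercased word set, used as a toy stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Hypothetical store that keeps each document under a citable source id."""

    def __init__(self):
        self.docs = {}  # source id -> text

    def upsert(self, source_id, text):
        # Adding or correcting a document needs no model retraining.
        self.docs[source_id] = text

    def remove(self, source_id):
        self.docs.pop(source_id, None)

    def retrieve(self, query, k=1):
        # Return (source id, text) pairs so answers can cite their sources.
        query_tokens = tokens(query)
        scored = [(len(query_tokens & tokens(text)), sid, text) for sid, text in self.docs.items()]
        scored = [entry for entry in scored if entry[0] > 0]
        scored.sort(key=lambda entry: entry[0], reverse=True)
        return [(sid, text) for _, sid, text in scored[:k]]


# Invented sample data for illustration only.
kb = KnowledgeBase()
kb.upsert("pricing-v1", "The starter plan costs 10 dollars per month.")
kb.upsert("returns", "Items can be returned within 30 days.")

question = "How much does the starter plan cost?"
before = kb.retrieve(question)

# Correct the faulty entry in place; the next retrieval sees the fix immediately.
kb.upsert("pricing-v1", "The starter plan costs 12 dollars per month.")
after = kb.retrieve(question)

print(before)
print(after)
```

Because every retrieved passage carries its source id, a response built from it can point back to the exact record that was used, and a wrong record can be traced and fixed.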

Challenges of RAG Implementation

- Newness and Learning Curve: As a relatively recent innovation, RAG requires technical teams to develop expertise in its design and operation.

- Infrastructure Requirements: Maintaining a vector database and retrieval engine demands additional resources.

- Data Modeling Complexity: Organizations must carefully structure and embed both structured and unstructured data.

- Monitoring and Maintenance: Systems must be in place to correct or remove inaccurate data.

Use Cases and Applications

RAG is being used across industries to deliver tailored and trustworthy responses:

- Customer Service: Review support transcripts and knowledge bases to give real-time, accurate responses.

- Healthcare: Search through medical journals and research to help clinicians find relevant studies.

- Energy: Analyze geological and drilling data for oil and gas discovery.

- Finance: Interpret financial reports, investor filings, and market news to guide decision-making.

- Travel: Power chatbots that answer location-specific questions, such as beach safety or nearby amenities.

The Future of RAG

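Future RAG systems may go beyond answering questions and act on the information they retrieve. For example:

- A vacation planner bot could recommend and book the highest-rated beach resort based on real-time reviews, availability, and user preferences.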

- An internal HR assistant could recommend education programs aligned with an employee’s goals—and automatically start the tuition reimbursement process.

As RAG evolves, it will blur the lines between information retrieval and decision-making—creating systems that are not only knowledgeable, but proactive.

Conclusion
