Have you ever thought about building your own large language model (LLM) for a custom task, something magical that understands your world perfectly? Many of us have had that spark of inspiration at some point. The idea of creating an AI that speaks your language, follows your workflows, and responds just the way you need it to is exciting.

But then comes reality:

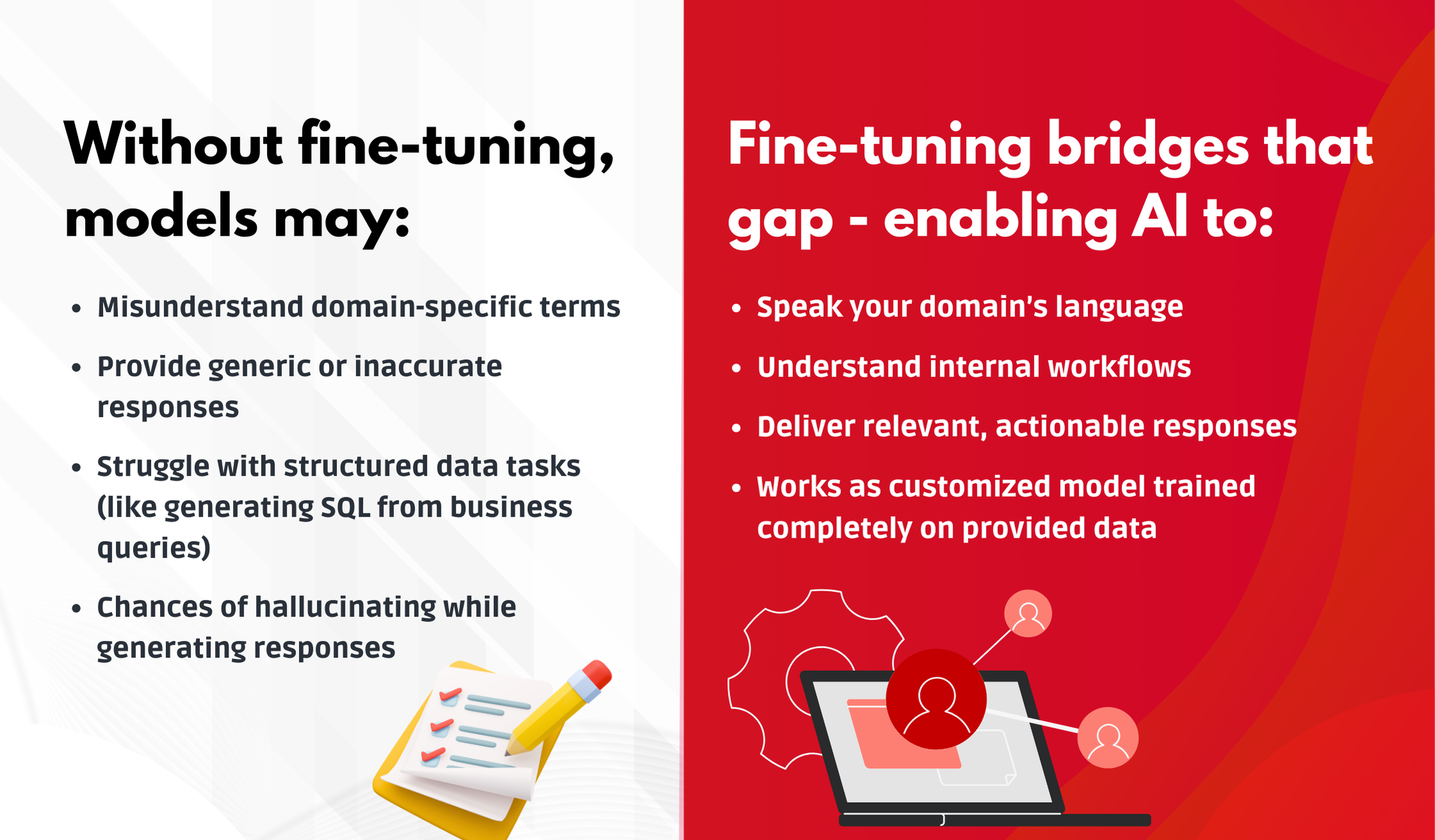

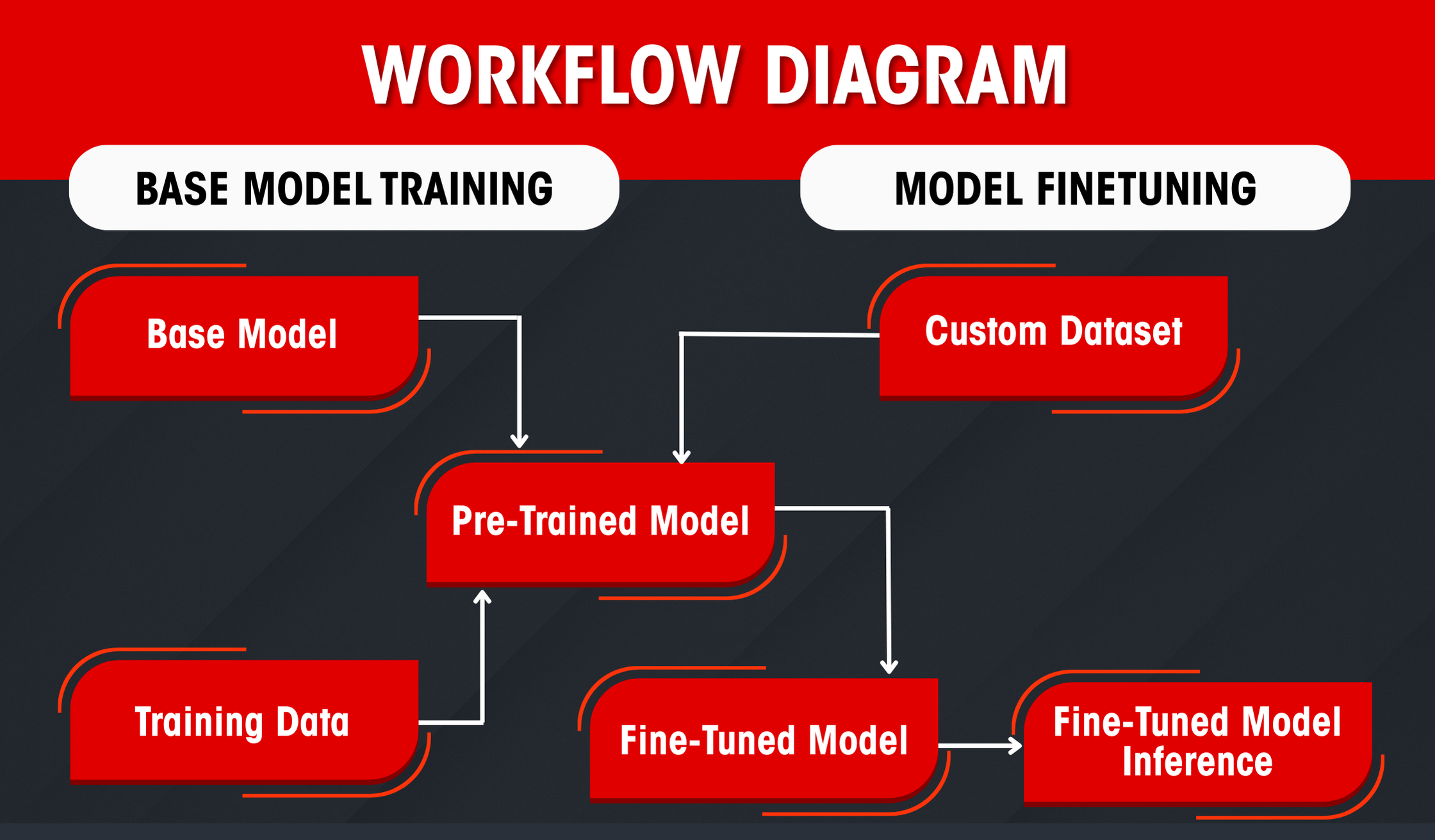

Building an LLM from scratch is complex, time-consuming, and resource-intensive. For most teams, the dream fades quickly. That’s where fine-tuning changes everything. Instead of starting from zero, what if you could take a powerful, pre-trained model and teach it your domain, your data, and your goals? Fine-tuning makes that possible. It’s like customizing the brain of a super-intelligent assistant so that it understands you.

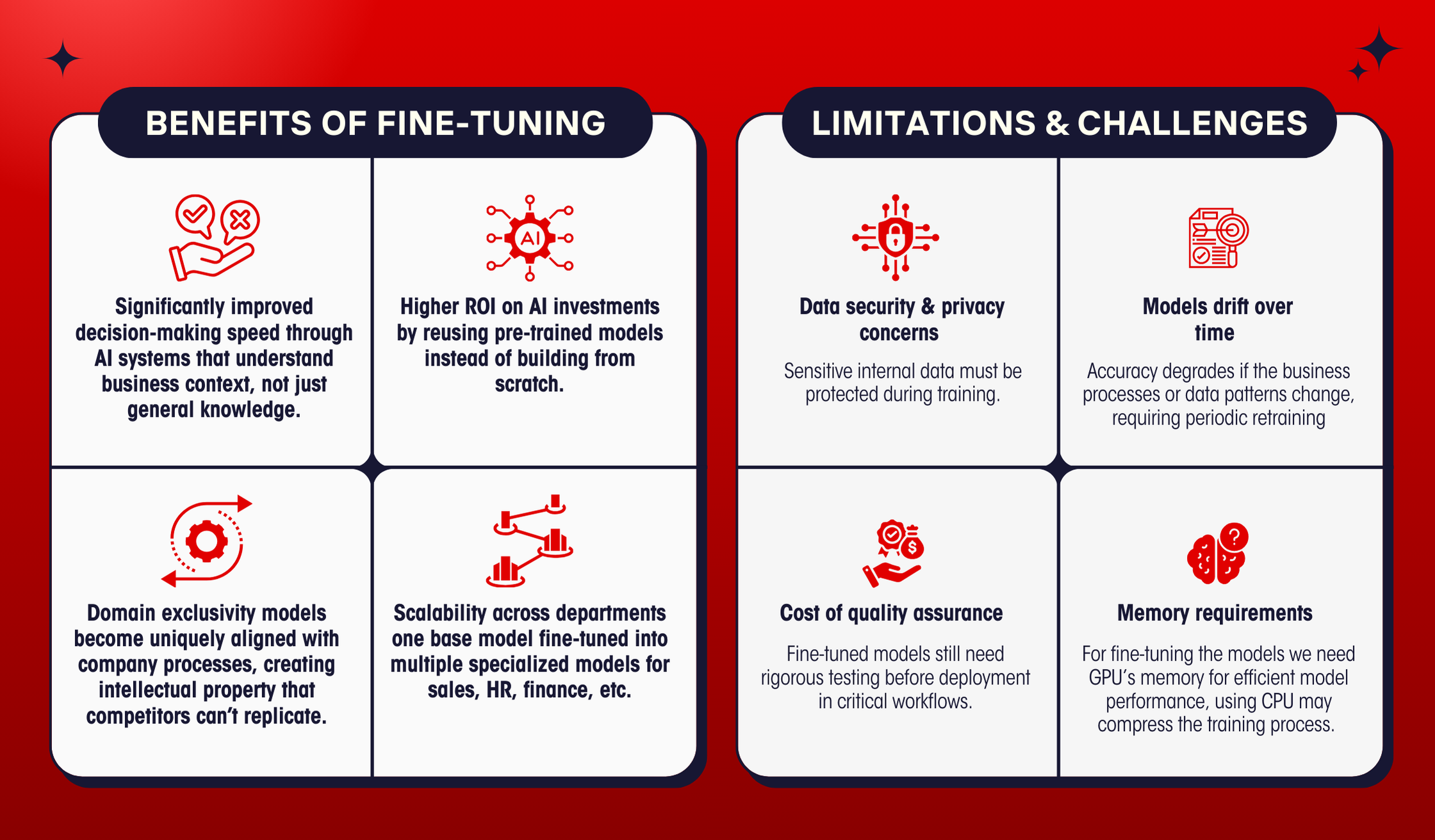

Artificial intelligence is powerful, but to make it truly work for our domain, our language, and our users, we need more than out-of-the-box solutions. Fine-tuning teaches an AI model not just general knowledge but also your company’s language, systems, and goals. In this article, we’ll walk through what fine-tuning means, why it matters, how we use it, and where it fits into the bigger picture of applied AI.

Fine-tuning is the process of taking a pre-trained AI model (like GPT, T5, or BERT) and continuing its training on a smaller, task-specific dataset. This helps the model specialize in the domains, jargon, and patterns relevant to a particular business or use case.

It’s like hiring a smart new team member: they already know a lot, but you still need to train them to follow your processes and use your vocabulary.

Let’s say you are working on a natural language task, for example converting plain English into a SQL query using a large language model (LLM).

Now imagine the prompt is:

“List the completed orders in the past month.”

A general-purpose LLM might return a syntactically correct SQL query because it understands SQL structure and grammar. However, it won’t necessarily return a semantically correct or executable query. Because the model doesn’t know the schema of your database, it doesn’t know which table names, column names, or values to use.

In this case, a sample output might be:

`SELECT * FROM orders WHERE status = 'completed' AND order_date >= DATE_SUB(CURDATE(), INTERVAL 1 MONTH);`

Here, the model interpreted “status” as a column and “completed” as a value, and it guessed “orders” as the table name even though the real table might be “sales_orders.” This highlights the ambiguity in selecting table names, column names, attributes, and values.

The structure is correct, but the query fails whenever the actual schema uses different names.
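To make the failure concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The table name `sales_orders` and the column `order_status` are hypothetical, chosen only to illustrate a schema that differs from the model’s guess, and the date expression is adapted to SQLite syntax rather than the MySQL-style functions in the sample output.

```python
import sqlite3

# Hypothetical schema (an assumption for illustration): the real table is
# `sales_orders` and the status column is `order_status`.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_orders ("
    "id INTEGER PRIMARY KEY, order_status TEXT, order_date TEXT)"
)
conn.execute(
    "INSERT INTO sales_orders (order_status, order_date) "
    "VALUES ('completed', date('now', '-3 days'))"
)

# The general-purpose model's guess, with the date filter in SQLite syntax.
generic_sql = (
    "SELECT * FROM orders WHERE status = 'completed' "
    "AND order_date >= date('now', '-1 month')"
)

# What a schema-aware model would need to produce for this database.
schema_aware_sql = (
    "SELECT * FROM sales_orders WHERE order_status = 'completed' "
    "AND order_date >= date('now', '-1 month')"
)


def try_query(sql):
    """Run a query and return (rows, error_message); one of the two is None."""
    try:
        return conn.execute(sql).fetchall(), None
    except sqlite3.OperationalError as exc:
        return None, str(exc)


generic_rows, generic_error = try_query(generic_sql)
aware_rows, aware_error = try_query(schema_aware_sql)

print("generic query error:", generic_error)
print("schema-aware rows:", aware_rows)
```

The first query is valid SQL, yet the database rejects it because the table it names does not exist. The second query differs only in its identifiers and runs without error.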

This is where fine-tuning comes in. Different techniques help you train the model to speak your language and learn your schema, your vocabulary, and your logic, so that it generates not just correct code but context-aware, business-ready solutions.
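As a rough sketch of what “teaching the model your schema” could look like in practice, the snippet below builds a tiny task-specific dataset of question and SQL pairs and serializes it as JSON Lines. The schema string, the second example, and the `prompt`/`completion` field names are all assumptions for illustration. The exact record format depends on the training framework you use.

```python
import json

# Hypothetical schema description (table and column names are assumptions).
SCHEMA = "sales_orders(id, order_status, order_date)"

# Hand-written (question, SQL) pairs that use the real schema names.
examples = [
    (
        "List the completed orders in the past month.",
        "SELECT * FROM sales_orders WHERE order_status = 'completed' "
        "AND order_date >= DATE_SUB(CURDATE(), INTERVAL 1 MONTH);",
    ),
    (
        "How many orders were completed in the past month?",
        "SELECT COUNT(*) FROM sales_orders WHERE order_status = 'completed' "
        "AND order_date >= DATE_SUB(CURDATE(), INTERVAL 1 MONTH);",
    ),
]


def to_record(question, sql):
    """Pair a schema-aware prompt with the SQL the model should learn to emit."""
    prompt = f"Schema: {SCHEMA}\nQuestion: {question}\nSQL:"
    return {"prompt": prompt, "completion": " " + sql}


# One JSON object per line, a common layout for fine-tuning datasets.
jsonl = "\n".join(json.dumps(to_record(q, s)) for q, s in examples)
print(jsonl)
```

A real dataset would need many more examples covering the tables, joins, and business terms the model is expected to handle.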

Now let me walk you through a few fine-tuning techniques and how they help in practice.
